Introduction to AI-Powered Scams: Definition & Impact

Why AI Scams Matter: Key Differences from Traditional Fraud

Scope & Financial Impact of AI-Driven Fraud (2024–2025)

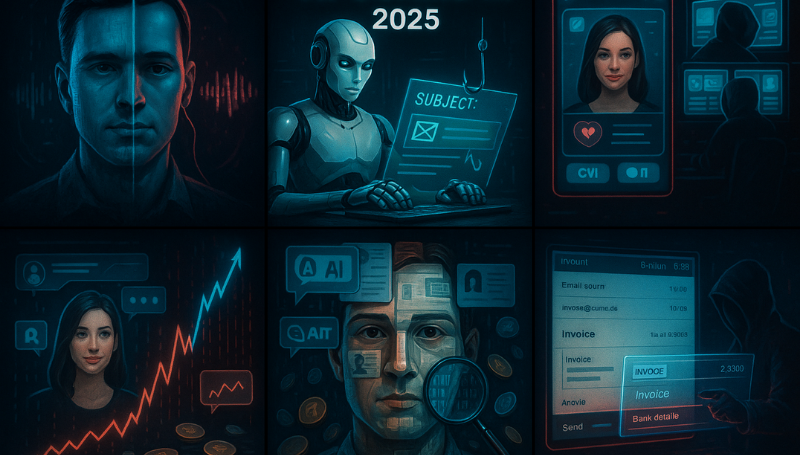

- Deepfake and Voice Cloning Scams

- AI-Generated Phishing and Conversational Phishing Explained

- AI-Powered Romance Scams: Synthetic Personas & Pig Butchering

- AI-Driven Investment Scams: Pump-and-Dump & Market Manipulation

- Synthetic Identity Fraud: How Fraudsters Create “Frankenstein IDs”

- AI-Enhanced Business Email Compromise (BEC)

Real-World AI Scam Cases (2024–2025)

- The Hong Kong Deepfake Heist (Arup Incident)

- UAE Corporate Voice Cloning Attack

- The UK Energy Firm CEO Fraud (Historical Context)

- Individual and Family-Targeted Scams

- Real-Time Deepfake Integration

- The Rise of Fraud-as-a-Service Toolchains

- Enhanced Evasion and Adaptivity

- Behavioral and Contextual Red Flags (For Individuals and Organizations)

- Technical Cues for Deepfakes and Voice Cloning

- Organizational and Technical Detection

- For Individuals: Practical Steps and Digital Hygiene

- For Organizations: Technical Controls and Policy Enhancements

- For Policymakers and Industry: Standardization and Regulation

Introduction to AI-Powered Scams: Definition & Impact

AI-powered scams are fraudulent schemes that deliberately leverage Artificial Intelligence (AI) technologies, such as generative AI, machine learning models, natural language processing (NLP), and computer vision, to enhance the credibility, scale, personalization, or automation of deceptive practices. They target individuals or organizations, typically for financial gain or data theft.

Unlike traditional scams, which often rely on generic templates, poor grammar, and manual execution, AI-powered scams utilize advanced technology to create highly personalized, adaptive, and sophisticated deception campaigns.

Why AI Scams Matter: Key Differences from Traditional Fraud

The integration of AI into criminal operations represents a paradigm shift in cybercrime. AI has dramatically enhanced attackers’ capabilities across every dimension of scam execution, transforming scams from often-transparent attempts into sophisticated operations that challenge even security professionals.

The key differences between AI-powered and traditional scams include:

• Scale and Speed: Traditional scams are limited by human effort, but AI enables mass automation. Scammers can generate thousands of unique, tailored phishing messages or profiles in minutes.

• Realism and Quality: AI-generated content lacks the traditional “tells” of scams, such as poor spelling and grammar. Sophisticated large language models (LLMs) produce professionally crafted, grammatically flawless content that is increasingly difficult to distinguish from genuine communication.

• Hyper-Personalization: AI algorithms analyze vast amounts of data, scraped from social media and public records, to create highly tailored messages that resonate specifically with the target victim or organization.

• Adaptivity: AI systems can learn from victim responses and modify their tactics in real time, overcoming objections or skepticism during complex interactions.

Scope & Financial Impact of AI-Driven Fraud (2024–2025)

AI-driven fraud is evolving at an alarming pace, outpacing many traditional security measures and increasing the financial and reputational risks to businesses.

The current threat landscape (2024–2025) is characterized by industrial-scale operations and widespread misuse of mainstream AI tools. The statistics underscore the severity of this crisis:

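• Financial Losses: Losses reported to the FBI’s Internet Crime Complaint Center (IC3) exceeded $16.6 billion in 2024, a 33% increase from the previous year; this figure covers all reported internet-enabled fraud, including, but not limited to, AI-driven scams. Total global scam losses were estimated at around $1 trillion in 2024.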

• Deepfake Surge: Deepfake incidents in the financial sector alone surged by 700% in 2023 compared to the previous year. Over the same period, deepfake fraud in North America increased by 1,740%.

• Projection: Fraud losses facilitated by generative AI in the U.S. are projected to rise to $40 billion by 2027.

• Prevalence: Over half of finance professionals in the US and UK had been targeted by deepfake-powered financial scams, with 43% admitting that they had fallen victim to these attacks.
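A quick way to read these percentage figures (simple arithmetic on the numbers above, not additional data): an increase of p% multiplies the earlier value by (1 + p/100). So a 33% rise to $16.6 billion implies roughly $12.5 billion the year before, and a 700% surge means about eight times as many incidents, not seven.

```latex
\begin{aligned}
\text{earlier value} &= \frac{\text{later value}}{1 + p/100}
  &&\Rightarrow\ \frac{16.6}{1.33} \approx 12.5 \text{ (billion USD)} \\
\text{multiplier for a } p\% \text{ increase} &= 1 + p/100
  &&\Rightarrow\ 1 + 7.00 = 8, \quad 1 + 17.40 = 18.4
\end{aligned}
```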

Types of AI-Powered Scams

AI-powered scams leverage distinct technological strengths, ranging from impersonation to market manipulation. The most common AI scams observed in 2025 include:

1. Deepfake and Voice Cloning Scams

Deepfakes use machine learning algorithms to create convincing, lifelike representations of people. This technology is often used for impersonation scams, leveraging both visual and audio manipulation.

• Deepfake Video Scams: AI is used to mimic a person’s face and voice in convincing video impersonations, making it very difficult to distinguish real requests from fraudulent ones. The effect is most powerful when a video appears to show a celebrity, or even a loved one, asking directly for money or help.

• Voice Cloning Scams (AI Phone Scams): These schemes use AI-generated voices to mimic real people, such as a loved one or a trusted official. Modern text-to-speech tools use deep learning algorithms to mimic human speech patterns, intonation, and emotion, making it hard to distinguish between a real message and a fake one, especially over a phone line. Scammers often need only a short audio sample, sometimes as little as 3 seconds, to clone a voice. The objective is to exploit emotional vulnerability, for example by faking a kidnapping or an emergency. Alarmingly, 77% of voice clone victims reported financial losses.

2. AI-Generated Phishing and Conversational Phishing Explained

AI has streamlined the creation of astonishingly realistic phishing emails, eliminating the poor grammar and obvious red flags typical of older attempts.

• AI-Generated Phishing: Natural language processing fine-tunes the tone to be highly convincing, creating emails that appear legitimate and trustworthy. These emails are hyper-personalized, often referencing specific activities or imitating internal company communication styles. LLMs can improve phishing success rates and make such emails cheap to produce; some reports suggest that 61% of phishing emails are now AI-generated.

• AI-Powered Conversational Phishing: If a victim responds to a phishing attempt, AI-driven chatbots can seamlessly pick up and maintain the conversation. These bots rapidly parse previous conversations, analyze human communication patterns, and deliver timely, believable responses to make the interaction feel authentic. This approach is designed to bypass traditional email filters and lower the victim’s social defenses before delivering the malicious payload.

3. AI-Powered Romance Scams: Synthetic Personas & Pig Butchering

Dating and romance scams have become more sophisticated and scalable with the aid of AI.

• Synthetic Personas: Fraudsters use generative AI to manage multiple conversations simultaneously, allowing them to easily maintain the illusion of genuine interest and emotional connection across language barriers and over extended periods. Scammers may also use AI-powered face-swapping technology during live video calls.

• Pig Butchering Scams: Pig butchering is a well-known form of romance-investment fraud. Scammers slowly build trust through affectionate interactions (“fattening the pig”) before steering the conversation, seemingly naturally, toward financial advice or fraudulent investment opportunities.

4. AI-Driven Investment Scams: Pump-and-Dump & Market Manipulation

These scams leverage sophisticated algorithms and machine learning to deceive investors on a massive scale, usually targeting cryptocurrency and stock trading.

• Misinformation and Manipulation: Scammers use AI to create fake social media profiles, forums, and websites that spread convincing misinformation about investment opportunities. AI can also manipulate stock prices through astroturfing, which involves creating a false appearance of grassroots support (false hype or fear) using thousands of fake accounts to trick real investors.

• Pump-and-Dump Schemes: A common example involves selecting a low-liquidity cryptocurrency, launching an AI-driven astroturfing campaign (the pump phase) to generate false hype, and employing AI-driven trading bots to simulate high trading volume. Once the price peaks, scammers sell their holdings (the dump phase), causing the price to crash and leaving real investors with worthless cryptocurrency.

• Quantum AI Scams: These are scams that trick people into investing in fake quantum computing technologies, promising enormous returns by leveraging buzzwords like “quantum computing” and “AI” to create a false sense of legitimacy.
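Because the pump phase relies on bots simulating trading volume, one crude warning sign is a sudden volume spike relative to recent history. The sketch below is a minimal rolling z-score check, assuming a plain list of daily volumes; the function name, window, threshold, and data are illustrative assumptions, not a market-surveillance method used by any particular exchange or regulator.

```python
from statistics import mean, stdev


def flag_volume_spikes(volumes: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices whose volume sits more than `threshold` standard deviations
    above the mean of the preceding `window` observations (a rolling z-score)."""
    flagged = []
    for i in range(window, len(volumes)):
        history = volumes[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip perfectly flat windows, where the z-score is undefined.
        if sigma > 0 and (volumes[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily volumes for a thinly traded token: quiet days, then a bot-driven surge.
daily_volume = [100, 110, 95, 105, 100, 98, 102, 900, 1200, 150]
print(flag_volume_spikes(daily_volume))  # [7]
```

Index 8 (volume 1,200) is not flagged: the first spike inflates the mean and spread of the next window, masking the follow-up. Excluding already-flagged points from the history, or using a median-based baseline, would be natural ways to reduce this effect.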

5. Synthetic Identity Fraud: How Fraudsters Create “Frankenstein IDs”

This is an emerging scam technique involving the creation of fake identities (sometimes called “Frankenstein IDs”) by combining real personal information—like a Social Security number (SSN)—with realistic AI-generated fabricated details, such as fake names, addresses, or dates of birth.

• Objective: Scammers use these synthetic identities to bypass banks’ application and verification processes, opening accounts, applying for credit, or committing financial crimes with no intention of repaying the loans. This is the fastest-growing financial crime in the United States. Losses due to synthetic identity fraud could reach at least $23 billion by 2030.
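Because a synthetic identity reuses a real identifier with fabricated details, one simple signal is a single SSN that appears with several different names or birth dates. The sketch below assumes a hypothetical, simplified `Application` record and placeholder identifiers; real lenders combine many more signals, and a synthetic identity built on a never-used SSN would not trip this check.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Application:
    """A hypothetical, simplified credit application record."""
    ssn: str
    name: str
    dob: str  # ISO date, e.g. "1985-02-01"


def flag_shared_ssns(apps: list[Application]) -> dict[str, set[tuple[str, str]]]:
    """Return each SSN seen with more than one distinct (name, date of birth) pair."""
    identities: dict[str, set[tuple[str, str]]] = defaultdict(set)
    for app in apps:
        # Normalize case and spacing so "Jane Doe" and "jane  doe" count as one identity.
        normalized_name = " ".join(app.name.lower().split())
        identities[app.ssn].add((normalized_name, app.dob))
    return {ssn: ids for ssn, ids in identities.items() if len(ids) > 1}


# Placeholder identifiers only; "ssn-001" stands in for a real SSN.
applications = [
    Application("ssn-001", "Jane Doe", "1985-02-01"),
    Application("ssn-001", "jane  doe", "1985-02-01"),
    Application("ssn-001", "Mark Lee", "1999-07-19"),
    Application("ssn-002", "Ann Ray", "1970-11-30"),
]
print(sorted(flag_shared_ssns(applications)))  # ['ssn-001']
```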

6. AI-Enhanced Business Email Compromise (BEC)

BEC involves using compromised or spoofed corporate email accounts to trick employees or partners into making fraudulent payments. AI significantly increases the effectiveness of this scam type.

• Invoice Swapper Attacks: Attackers gain access to legitimate business email accounts and use AI-powered tools to scan communications for invoice details. They intercept legitimate invoices, modify the bank account details, and reinsert the manipulated invoices into the email thread, directing payments to accounts controlled by the scammers.
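Invoice swapping works because payment details are trusted as they arrive in the thread. A minimal defensive sketch, assuming a hypothetical vendor master file of bank details verified at onboarding: any invoice whose account number differs from the record is held for a callback on the number already on file. The IBAN shown is a widely used example value and the phone number is a placeholder.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    vendor_id: str
    iban: str


# Hypothetical vendor master file: bank details and phone numbers verified at onboarding.
VENDOR_MASTER = {
    "V-100": {"iban": "GB29NWBK60161331926819", "phone": "+44 20 7946 0000"},
}


def normalize_iban(iban: str) -> str:
    """Strip spaces and uppercase so formatting differences are not mistaken for changes."""
    return iban.replace(" ", "").upper()


def check_invoice(invoice: Invoice) -> str:
    record = VENDOR_MASTER.get(invoice.vendor_id)
    if record is None:
        return "HOLD: unknown vendor"
    if normalize_iban(invoice.iban) != normalize_iban(record["iban"]):
        # Use the phone number on file, never one supplied in the invoice or email thread.
        return f"HOLD: bank details differ from master record; call {record['phone']}"
    return "OK"


print(check_invoice(Invoice("V-100", "gb29 nwbk 6016 1331 9268 19")))  # OK
print(check_invoice(Invoice("V-100", "GB94BARC10201530093459")))
```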

Real-World AI Scam Cases (2024–2025)

The rapid evolution of AI-powered scams is best understood through high-profile incidents that demonstrate unprecedented financial impact and technical sophistication.

The Hong Kong Deepfake Heist (Arup Incident)

• Date: February 2024.

• Victim: A finance clerk at a Hong Kong branch of a multinational corporation (later identified as the engineering firm Arup).

• Loss: The clerk was tricked into authorizing multiple transfers totaling over $25 million (HK$200 million).

• Attack Mechanism: Scammers compiled public video and audio footage of senior executives to train deepfake models. They then organized an elaborate video conference with the clerk that featured multiple deepfake executives, including the Chief Financial Officer (CFO). The employee reported that both the live images and voices seemed real and recognizable.

• Lesson Learned: This case underscored the severe financial risk of real-time deepfake video calls that target organizational hierarchies and approval processes. Organizations must establish independent verification protocols for financial requests made over video or voice, even when they appear to come from senior executives.

UAE Corporate Voice Cloning Attack

• Date: 2024.

• Victim: A corporate entity in the UAE.

• Loss: The scammers orchestrated a massive $51 million heist.

• Attack Mechanism: Cybercriminals cloned the voice of a company director using publicly available audio samples. They then used the cloned voice to conduct phone-based authorization of large financial transfers.

• Lesson Learned: Voice cloning, a relatively easy-to-execute fraud method, can result in staggering corporate losses. This highlights the necessity for multi-factor authentication and dual authorization for large financial transactions.

The UK Energy Firm CEO Fraud (Historical Context)

• Date: 2019.

• Victim: A UK energy firm.

• Loss: The firm lost €220,000 (approximately $243,000).

• Attack Mechanism: Fraudsters used AI-generated audio imitating the voice of the chief executive of the firm’s German parent company to convince the UK firm’s CEO to wire the funds.

• Historical Significance: This incident is widely cited as one of the first publicly reported cases of voice cloning used in corporate fraud, marking its emergence as a viable and highly effective attack vector.

Individual and Family-Targeted Scams

• US Virtual Kidnapping Scam (2024): A US family lost $15,000 after receiving a call where scammers used a realistic deepfake of their teenager’s voice, complete with crying, claiming a car accident had occurred.

• India Family Emergency Scams (2024): These cases show how vulnerable populations are targeted. A senior citizen in Delhi was defrauded of 50,000 rupees (~$600) via an AI-cloned child’s voice claiming kidnapping. A retired government employee in Kerala lost 40,000 rupees (~$480) to combined deepfake video and voice manipulation.

• Victim Demographics: Statistics show that the age group 60 and older reported losses totaling $1.6 billion from nearly 17,000 complaints in 2024.

Emerging Scammer Tactics

The criminal ecosystem has rapidly adapted, leveraging new technologies and sophisticated strategies in 2024–2025 to increase speed, scale, and evasion capabilities.

Real-Time Deepfake Integration

Scammers are moving beyond pre-recorded fake content to real-time manipulation.

• Live Impersonation: This includes using tools for real-time face-swapping during video calls, enabling impersonation of executives or contacts instantaneously.

• Multi-Person Scams: The Arup case demonstrated the ability to generate multi-person deepfake conferences in a single video call, overwhelming the victim with apparent social proof and authority.

• Voice Modulation: Attackers use live voice modulation to replicate specific accents or speech patterns in real time.

The Rise of Fraud-as-a-Service Toolchains

The development of sophisticated tools available on dark web markets has resulted in a Fraud-as-a-Service model that lowers the entry barrier for cybercriminals.

• Malicious LLMs: Generative AI models have been jailbroken or specialized for crime. WormGPT and FraudGPT are examples of malicious Large Language Models used specifically for generating convincing phishing emails, business compromise messages, and malicious code.

• Synthetic Document Creation: Services like OnlyFake facilitate synthetic identity fraud by generating realistic fake IDs, passports, and utility bills for minimal cost (as little as $15).

• Automated Targeting: Tools are being used to automate reconnaissance, scraping personal data, voice samples, and video content from social media platforms to identify high-value targets based on psychological profiling.

Enhanced Evasion and Adaptivity

Scammers are using AI to make their attacks harder to trace and detect.

• Adversarial AI: Threat actors are employing adversarial machine learning techniques to create synthetic content specifically designed to bypass current AI detection systems and filters. This creates a continuous arms race between attackers and defenders.

• Adaptive Social Engineering: AI systems continuously monitor the victim’s responses and objections during a scam interaction. They can rapidly adjust the narrative, tone, and requests to maintain believability and push the victim toward the desired action.

Future Risk Scenarios

Future projections indicate that scams will become even more immersive and autonomous.

• Autonomous Scam Campaigns: Self-directing AI agents may execute complete scam cycles—from identifying targets and generating personalized content to managing complex, long-term interactions—without human intervention.

• Multi-Modal Synthesis: Scams will seamlessly integrate voice, video, and text generation in real time, enabling highly convincing synthetic interactions during extended conversations.

• Behavioral Cloning: AI may eventually replicate decision-making patterns and unique behavioral tics of targets, making impersonation even harder to detect.

Detection

Effective detection of AI-powered scams requires a combination of vigilance, behavioral skepticism, and technical analysis. Individuals and organizations must look for specific red flags that betray the synthetic nature of the communication or the manipulative intent behind the request.

Behavioral and Contextual Red Flags (For Individuals and Organizations)

These indicators apply across emails, phone calls, and video communications:

• Sense of Urgency and Secrecy: Scammers consistently use urgency to pressure victims into making quick decisions, preventing rational thought or independent verification. Be highly suspicious if the caller or sender demands you keep the request a secret.

• Unusual Payment Requests: Requests for money, especially through unconventional and hard-to-trace methods like wire transfers, gift cards, payment apps, or cryptocurrency, are major red flags. Payments made this way are difficult or impossible to reverse once you realize you have been scammed.

• Out-of-Character Behavior: The speaker or sender is doing something totally out of character, such as making an urgent financial demand or resisting standard verification procedures.

• Inconsistencies and Formal Language: Despite AI refining grammar, the language might still sound overly formal, stilted, or use unusual phrasing, especially when trying to generate emotional or personal content.
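Some of these red flags can be screened for mechanically. The sketch below is a crude keyword matcher with hand-picked, illustrative patterns (an assumption, not a vetted rule set). Well-crafted AI-generated messages can avoid these exact phrases, so treat matches as a prompt to verify, not as a verdict.

```python
import re

# Illustrative patterns for the red flags above; a hand-picked, non-exhaustive list.
RED_FLAGS = {
    "urgency": r"\b(urgent|urgently|immediately|right now|asap|within the hour)\b",
    "secrecy": r"\b(keep this (between us|confidential|secret)|don't tell|do not tell)\b",
    "unusual_payment": r"\b(gift cards?|wire transfer|bitcoin|crypto(currency)?|usdt)\b",
    "avoids_verification": r"\b(don't call|do not call|can't talk|no time to verify)\b",
}


def red_flags(message: str) -> list[str]:
    """Return the names of red-flag categories whose pattern appears in the message."""
    # Lowercase and map curly apostrophes to straight ones so "don’t" matches "don't".
    text = message.lower().replace("\u2019", "'")
    return [name for name, pattern in RED_FLAGS.items() if re.search(pattern, text)]


msg = "I need you to buy gift cards immediately. Keep this between us and don't call the office."
print(red_flags(msg))  # ['urgency', 'secrecy', 'unusual_payment', 'avoids_verification']
```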

Technical Cues for Deepfakes and Voice Cloning

Because AI is not yet perfect, subtle inconsistencies often remain in synthetic media.

• In a Video Call:

◦ Visual Artifacts: Look for jerky or unrealistic movements, shifts in lighting or skin tone, strange or absent blinking, and shadows around the eyes. There may be unnatural boundaries around the face regions or variable resolution in different parts of the image.

◦ Synchronization Issues: Watch for minor lip-sync errors or misalignment between the audio and mouth movements.

• In a Voice Call or Audio Message:

◦ Speech Patterns: Listen for unnatural rhythm and intonation (prosody), emotional flatness, or other irregularities, particularly the absence of normal breathing sounds.

◦ Digital Artifacts: Check for minor distortions, clipping, or background noise that is inconsistent, or missing where a genuine recording would normally capture it.

• In Emails/Links: Always check the sender’s address for subtle variations or misspellings. Hover over the link (do not click!) to preview the destination URL and check that the domain matches the organization’s official website.
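The link and sender checks above can be partly automated. This is a minimal sketch, assuming a hypothetical allow-list of your organization’s real domains: it extracts the true hostname from a URL or From: header and flags domains within two character edits of a trusted one (for example, `examp1ebank.com` versus `examplebank.com`). It uses only Python’s standard `urllib.parse` and `email.utils`; the domain splitting is deliberately naive and it does not handle Unicode lookalike characters.

```python
from email.utils import parseaddr
from urllib.parse import urlsplit

# Hypothetical allow-list of domains your organization actually uses.
TRUSTED_DOMAINS = ("examplebank.com", "example.org")


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance computed with a rolling dynamic-programming row."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute
        prev = curr
    return prev[-1]


def classify_domain(host: str, trusted: tuple[str, ...] = TRUSTED_DOMAINS) -> str:
    host = host.lower().rstrip(".")
    for domain in trusted:
        if host == domain or host.endswith("." + domain):
            return "trusted"
    # Naive: compare only the last two labels (wrong for suffixes such as .co.uk).
    base = ".".join(host.split(".")[-2:])
    for domain in trusted:
        if edit_distance(base, domain) <= 2:
            return f"lookalike of {domain}"
    return "unknown"


def classify_link(url: str) -> str:
    """Check where a link really points, as hovering over it would show."""
    return classify_domain(urlsplit(url).hostname or "")


def classify_sender(from_header: str) -> str:
    """Check the domain of the address in a From: header."""
    _, address = parseaddr(from_header)
    return classify_domain(address.rpartition("@")[2])


print(classify_link("https://secure.examp1ebank.com/login"))  # lookalike of examplebank.com
print(classify_sender("Support <support@exarnplebank.com>"))   # lookalike of examplebank.com
```

A distance of two catches common tricks such as swapping `l` for `1` or `m` for `rn`, at the cost of occasionally flagging a legitimate, similarly named domain.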

Organizational and Technical Detection

Organizations should utilize technology specifically designed to combat AI fraud.

• AI Detection Tools: Deploying AI-powered detection systems is crucial to identify synthetic media, often using forensic analysis on video and audio files.

• Behavioral Biometrics: Implement biometric verification, such as voice-print analysis combined with liveness detection (checking whether the media comes from a live person or is pre-recorded or synthetic).

• Metadata Verification: Analyzing file properties, such as creation timestamps and network pathway information, can help track the origin of suspicious content.
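For email, much of the “network pathway information” lives in the `Received` headers that each mail server adds. A minimal sketch using Python’s standard `email` package, with a hypothetical sample message: it lists the hops from earliest to latest and checks whether `Reply-To` points to a different domain than `From`, a pattern often seen in BEC. The function names and sample message are illustrative.

```python
from email import message_from_string
from email.utils import parseaddr


def received_chain(raw_message: str) -> list[str]:
    """Return Received headers ordered from the earliest hop to the latest.

    Each relay prepends its own Received header, so the raw order is newest-first.
    """
    msg = message_from_string(raw_message)
    hops = msg.get_all("Received") or []
    return [" ".join(hop.split()) for hop in reversed(hops)]


def reply_to_mismatch(raw_message: str) -> bool:
    """True when Reply-To points at a different domain than From."""
    msg = message_from_string(raw_message)
    reply_to = msg.get("Reply-To")
    if not reply_to:
        return False
    from_domain = parseaddr(msg.get("From", ""))[1].rpartition("@")[2].lower()
    reply_domain = parseaddr(reply_to)[1].rpartition("@")[2].lower()
    return from_domain != reply_domain


# Hypothetical message using documentation-reserved IP ranges and domains.
RAW = """Received: from mx.example.org (mx.example.org [203.0.113.5]) by mail.example.com; Mon, 3 Jun 2024 10:02:00 +0000
Received: from unknown-host (198.51.100.7) by mx.example.org; Mon, 3 Jun 2024 10:01:55 +0000
From: CFO <cfo@example.org>
Reply-To: CFO <cfo.office@example.net>
Subject: Urgent transfer

Please process the payment today.
"""

for hop in received_chain(RAW):
    print(hop)
print("Reply-To mismatch:", reply_to_mismatch(RAW))  # Reply-To mismatch: True
```

Headers added before a message reaches your own mail servers can be forged by the sender, so only the hops your infrastructure recorded should be treated as reliable.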

Response

If an individual or an organization suspects they have been targeted by or have fallen victim to an AI-powered scam, immediate action is critical to mitigate harm.

4 Immediate Actions (0–4 Hours)

1. Stop All Activity: Immediately cease all communication with the suspected scammer and do not transfer any funds or provide further information.

2. Document Everything: Collect and preserve all evidence, including emails, text messages, recordings, and screenshots. Do not delete any communications.

3. Secure Your Accounts: Immediately change all passwords for any potentially compromised accounts, especially email and financial services. Enable or strengthen Multi-Factor Authentication (MFA).

4. Verify Independently: If the scam involves an impersonated family member or executive, contact the real person or organization through a known, trusted method (e.g., calling their official number) to confirm the legitimacy of the emergency.
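Preserved evidence is easier to rely on later if you can show the files were not altered after collection. A minimal sketch, assuming the evidence has been copied into a single folder: it records each file’s size and SHA-256 hash. The function names and folder layout are hypothetical, and this supplements, rather than replaces, keeping the original messages and devices.

```python
import hashlib
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Hash a file in chunks so large recordings do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(evidence_dir: Path) -> list[dict]:
    """List every file under evidence_dir with its size and SHA-256 hash."""
    recorded_at = datetime.now(timezone.utc).isoformat()
    return [
        {
            "file": p.relative_to(evidence_dir).as_posix(),
            "bytes": p.stat().st_size,
            "sha256": sha256_file(p),
            "recorded_at_utc": recorded_at,
        }
        for p in sorted(evidence_dir.rglob("*"))
        if p.is_file()
    ]


# Demo on a throwaway folder standing in for a real evidence folder.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "screenshot.txt").write_bytes(b"abc")
    demo = build_manifest(Path(tmp))
print(demo[0]["file"], demo[0]["sha256"])
```

In practice you might save `json.dumps(build_manifest(folder), indent=2)` to a file stored outside the evidence folder, then re-run the hashing later to confirm nothing has changed.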

Financial and Credit Actions

1. Notify Financial Institutions: Contact your banks, credit card companies, and insurance providers immediately to place alerts on accounts and potentially freeze transactions.

2. Place Fraud Alerts: Place fraud alerts on your credit reports with the major credit bureaus (Equifax, Experian, TransUnion). For a more proactive approach, individuals should consider freezing their credit at all three bureaus.

3. Initiate Recovery: Work with financial institutions to dispute fraudulent transactions and initiate reversal procedures.

Reporting the Incident

1. Report to Authorities: File a report with local law enforcement and the FBI’s Internet Crime Complaint Center (IC3), particularly for significant losses. You can also report the scam online to the Federal Trade Commission (FTC).

2. Notify Employer (If Applicable): If the scam was work-related, immediately alert key personnel, including the security team, finance department, and executive leadership. Organizational response teams should isolate affected systems and freeze related transactions immediately.

Prevention Strategies

Combating AI-powered scams requires a layered defense involving technical controls, organizational policy changes, and universal education.

For Individuals: Practical Steps and Digital Hygiene

Individual action is the first and most critical line of defense.

• Establish Verification Protocols: Never assume a call, email, text message, or video clip is genuine. Always verify unexpected requests, especially those involving money or sensitive information, through an alternative, trusted communication channel. For families, agree on a pre-determined “safe word” or specific, non-public verification questions to confirm identity during alleged emergencies.

• Practice Skepticism: Before taking action, stop and think rationally. Urgency is a major red flag; take back control of the situation by pausing.

• Limit Digital Footprint: Be wary of what you share on social media. Limit posting personal information, photos, and voice clips on public platforms, as this material can be used to train AI models for deepfake creation. Consider setting your social media accounts to private.

• Technical Defense Toolkit:

◦ Use strong and unique login credentials.

◦ Enable Multi-Factor Authentication (MFA), especially on financial and email accounts.

◦ Protect your devices by keeping anti-virus software, your operating system, and applications up to date.

◦ Avoid clicking on links or opening attachments in unsolicited messages. Instead, navigate to the claimed website by directly entering the official web address in your browser.

• Stay Informed: Knowledge is the best defense. Educate yourself regularly on the latest AI scams and security measures.
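For the “strong and unique credentials” step, a password manager will generate passwords for you. To see what that involves, this sketch uses Python’s `secrets` module, which is intended for security-sensitive randomness (unlike `random`); the 20-character default and the character set are illustrative choices, not a formal policy.

```python
import secrets
import string

ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Random password drawn with the cryptographically secure `secrets` module."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


print(generate_password())
```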

For Organizations: Technical Controls and Policy Enhancements

Businesses face growing financial risks and must adapt their security posture using an identity-first approach.

• Financial Transaction Protocols:

◦ Implement Dual Authorization: Require multiple approvers for all large financial transactions.

◦ Mandate Out-of-Band Verification: Require mandatory callback procedures to known, verified phone numbers to confirm financial requests or changes to payment instructions, especially for requests coming from executives or via email.

• Technical Safeguards:

◦ Deploy AI-Powered Detection Tools: Utilize specialized systems to identify synthetic media and deepfakes.

◦ Implement Biometric Verification: Use voice print analysis combined with liveness detection for high-risk voice verification systems.

◦ Utilize MFA: Make phishing-resistant MFA mandatory for access to all financial systems and sensitive data.

• Training and Culture: Conduct regular, AI-specific security awareness training that includes simulation exercises to expose employees to realistic deepfake scenarios. Cultivate a culture of psychological safety where employees are encouraged to question unusual or urgent requests without fear of reprisal.
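The dual-authorization and callback rules above can be encoded as a release check in a payment workflow. This is a minimal sketch under stated assumptions: the threshold, class, and field names are hypothetical, and approvals are assumed to be recorded in the payment system by distinct, authenticated users, so a “yes” given by a face on a video call does not count.

```python
from dataclasses import dataclass, field

# Hypothetical policy threshold; set to whatever "large" means for your organization.
DUAL_APPROVAL_THRESHOLD = 10_000


@dataclass
class PaymentRequest:
    requester: str
    amount: float
    payee_details_changed: bool = False
    approvers: set[str] = field(default_factory=set)
    # Set only after calling a number taken from the vendor/employee master record,
    # never one supplied in the request itself (email, chat, or video call).
    callback_confirmed: bool = False

    def approve(self, person: str) -> None:
        """Record an approval logged in the payment system, not a verbal OK on a call."""
        if person == self.requester:
            raise ValueError("requester cannot approve their own payment")
        self.approvers.add(person)


def can_release(req: PaymentRequest) -> tuple[bool, str]:
    large = req.amount >= DUAL_APPROVAL_THRESHOLD
    needed = 2 if large else 1
    if len(req.approvers) < needed:
        return False, f"needs {needed} independent approver(s)"
    # Large payments and any change to payee details both require out-of-band verification.
    if (large or req.payee_details_changed) and not req.callback_confirmed:
        return False, "needs out-of-band callback to a known number"
    return True, "ok"


# A hypothetical large transfer request with only one approval so far.
req = PaymentRequest(requester="finance.clerk", amount=250_000)
req.approve("controller")
print(can_release(req))  # (False, 'needs 2 independent approver(s)')
```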

For Policymakers and Industry: Standardization and Regulation

The legal and regulatory landscape must evolve rapidly to keep pace with AI misuse.

• Mandatory Content Transparency: Develop and implement technical standards for media watermarking (authentication markers) and provenance metadata for authentic digital content. Regulators should mandate the disclosure or labeling of AI-generated content.

• Accelerate Legal Frameworks: Modernize fraud and impersonation laws to explicitly cover AI-generated deepfakes and synthetic media, closing current enforcement gaps. The EU AI Act, which requires deepfakes and other AI-generated content to be disclosed as such and prohibits certain manipulative AI practices, serves as an example.

• Foster International Cooperation: Establish treaties and agreements for cross-border enforcement, intelligence sharing, and prosecution of AI-powered crimes.

• Support Defense Research: Fund research and development for advanced detection technologies and forensic tools for identifying AI-generated content.

• Victim Support: Develop victim recovery mechanisms and financial recovery frameworks to support individuals harmed by these sophisticated scams.
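Provenance metadata works by binding claims about a piece of media (who made it, whether AI was involved) to a cryptographic hash of its content, then signing the result. The sketch below shows that idea only. It uses a shared-secret HMAC as a simplified stand-in; real provenance standards such as C2PA rely on certificate-based public-key signatures embedded in the file, and the function names here are hypothetical.

```python
import hashlib
import hmac
import json


def sign_manifest(content: bytes, claims: dict, key: bytes) -> dict:
    """Bind claims (creator, AI-generated flag, ...) to the content's SHA-256 hash."""
    body = {**claims, "sha256": hashlib.sha256(content).hexdigest()}
    payload = json.dumps(body, sort_keys=True).encode()
    return {"claims": body, "signature": hmac.new(key, payload, hashlib.sha256).hexdigest()}


def verify_manifest(content: bytes, manifest: dict, key: bytes) -> bool:
    """Valid only if the claims are untouched and the content still matches its hash."""
    payload = json.dumps(manifest["claims"], sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return (hmac.compare_digest(expected, manifest["signature"])
            and manifest["claims"]["sha256"] == hashlib.sha256(content).hexdigest())


KEY = b"demo-shared-key"  # stand-in only; real schemes use per-signer private keys
video = b"...original video bytes..."
manifest = sign_manifest(video, {"creator": "Example Newsroom", "ai_generated": False}, KEY)
print(verify_manifest(video, manifest, KEY))             # True
print(verify_manifest(b"edited bytes", manifest, KEY))  # False
```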

Conclusion & Key Takeaways

AI-powered scams represent a fundamental shift in the fraud landscape, enabling attacks of unprecedented scale, sophistication, and effectiveness by combining automated efficiency with human-like credibility. This is not a future concern but a current crisis. The democratization of AI tools has lowered the barrier to entry for cybercrime, allowing actors to generate hyper-personalized content such as voice clones, deepfake videos, and realistic phishing emails that bypass traditional detection mechanisms.

The key statistics are alarming: losses reported to the FBI’s IC3 exceeded $16.6 billion in 2024 (covering all internet-enabled fraud, including AI-driven scams), deepfake incidents in the financial sector surged by 700%, and U.S. fraud losses facilitated by generative AI are projected to reach $40 billion by 2027.

Key Takeaways for Defense

Success in combating this evolving threat requires a coordinated, multi-layered defense strategy.

• Trust but Verify (Individuals): The most essential individual defense is to verify all unusual requests through an independent, known contact channel. Personal protocols, like using a family safe word, are crucial safeguards against emotional manipulation via voice cloning.

• Technical Controls (Organizations): Organizations must implement Multi-Factor Authentication and enforce strict payment protocols, especially Dual Authorization and Out-of-Band Verification for all significant transactions, to protect against executive impersonation.

• Transparency (Policymakers): The defense-in-depth approach must be supported by policy requiring the watermarking and labeling of synthetic media to maintain digital trust and aid forensic investigation.

Future Outlook

As generative AI advances, scams are expected to become more automated and multi-modal, making the distinction between real and fake communications nearly impossible without technical aid.

Threats include autonomous scam agents, increased synthetic identity fraud (projected $23 billion in losses by 2030), and sophisticated adversarial AI techniques designed specifically to evade detection systems.

The fight against AI-powered scams is a continuous arms race, demanding ongoing adaptation, investment, and cooperation across technical, legal, and educational domains.

FAQs for AI Powered Scams

- How Do AI-Powered Scams Use Social Media Data to Create Deepfake Attacks?

Scammers scrape public photos, voice clips, and personal details from Facebook, Instagram, and LinkedIn to train deepfake and voice-cloning AI models. They then send hyper-personalized phishing messages or video calls referencing recent posts to build trust before defrauding targets.

- Can Hackers Weaponize Alexa and Google Assistant with AI Voice Skills?

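Yes, at least in principle. Security researchers have demonstrated malicious third-party “skills” and apps for smart speakers (Amazon Alexa, Google Home) that imitate system prompts to phish passwords or keep listening after the user believes the session has ended. Attackers could combine the same approach with AI voices that mimic trusted services to request sensitive data or trigger unauthorized payments.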

- Which Browser Extensions and Mobile Apps Detect AI Deepfakes in Real Time?

Tools such as DeepGuard Browser and FaceShield Mobile are described as using on-device machine learning to scan videos for lip-sync errors, facial artifacts, and unnatural lighting. These apps are still evolving and their accuracy varies, so treat them as one proactive layer when browsing social media or joining video calls, not as proof that a video is genuine.

- How Can Small Businesses Prevent AI-Generated Phishing Without a Big Budget?

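Implement simple multi-step payment approvals, review and re-verify vendor contact details on a regular schedule (for example, quarterly), and run tabletop security drills built around realistic AI-generated phishing and deepfake scenarios. Free, open-source facial-analysis toolkits such as OpenFace can support such exercises, although OpenFace analyzes facial behavior and is not a dedicated deepfake detector. These low-cost measures reduce risk without expensive cybersecurity investments.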

- Do Cyber Insurance Policies Cover AI-Powered Deepfake and Voice-Cloning Fraud?

Most standard cyber policies lack explicit coverage for AI scams. Businesses should review language around “social engineering” and “impersonation” fraud, and purchase endorsements that specifically include deepfake video and voice-cloning attack coverage.

- How Do Free Generative AI Chatbots Fuel More Convincing Phishing Campaigns?

Scammers use free LLM platforms (e.g., ChatGPT, Google Gemini, formerly Bard) with carefully crafted prompts to produce highly personalized phishing emails, subject lines, and chatbot flows. This rapid content generation can boost phishing click-through rates and help messages evade email filters.

- Why Are DeFi Protocols Vulnerable to AI-Driven Rug Pull Investment Scams?

AI-powered hype bots flood Crypto Twitter and Telegram with fake endorsements and market data, artificially inflating a token’s price. Once investors buy in, scammers—often anonymous—drain liquidity pools, leaving victims with worthless tokens on decentralized exchanges.

- Can AI-Generated Audio Bypass Voice-Print Biometric Security Systems?

Early voice-print systems relying solely on spectral analysis can be spoofed by high-fidelity AI clones. Stronger defenses combine voice biometrics with liveness checks (e.g., challenge-response) and with other signals, such as behavioral biometrics like typing rhythm, so that a convincing fake voice alone is not enough to pass.
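A challenge-response liveness check asks the speaker to repeat an unpredictable phrase within a short time, which a pre-recorded clip cannot do. This is a minimal sketch of the challenge logic only; the word list, five-second limit, and function names are illustrative assumptions, and the transcript is assumed to come from a separate speech-to-text step. A real-time voice clone can still repeat the phrase, which is why such checks are combined with other signals.

```python
import secrets
import time

# Illustrative word list; a real deployment would use a much larger one.
WORDS = ["amber", "river", "seven", "lantern", "copper", "violet", "harbor", "maple"]


def new_challenge(n_words: int = 3) -> tuple[str, float]:
    """Issue an unpredictable phrase and record when it was issued."""
    phrase = " ".join(secrets.choice(WORDS) for _ in range(n_words))
    return phrase, time.monotonic()


def check_response(phrase: str, issued_at: float, transcript: str,
                   max_delay_s: float = 5.0) -> bool:
    """Accept only a prompt, exact repetition of the phrase.

    `transcript` is assumed to come from a separate speech-to-text step (not shown).
    """
    on_time = time.monotonic() - issued_at <= max_delay_s
    return on_time and transcript.strip().lower() == phrase


phrase, issued = new_challenge()
print("Please say:", phrase)
print(check_response(phrase, issued, phrase.upper()))  # True
```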

- How Are AI Voice-Cloning Scams Driving a Surge in Elder Financial Abuse?

Law enforcement reports a 40% increase in senior citizen losses due to AI phone scams. Scammers impersonate grandchildren or officials in real time, exploiting seniors’ trust and unfamiliarity with AI threats—highlighting the need for caregiver education and verification protocols.

- Where Can Victims of AI Scams Find Help and Recovery Resources Online?

Victims can report incidents to the FBI’s IC3 and the FTC, then seek support from nonprofits such as the Identity Theft Resource Center or the AARP Fraud Watch Network Helpline. Online communities such as Reddit’s r/Scams offer peer guidance on evidence collection, legal referrals, and emotional support.