Historical Evolution and Milestones

Types and Applications of Deepfakes

Global Statistics and Economic Impact

1. Core Tactics and Types of Scams

1. Timeline from Origins to Recent Trends

2. Enabling Factors for Growth

Notable Examples and Case Studies

1. Prevalence and Growth Rates

4. Detection Effectiveness Metrics

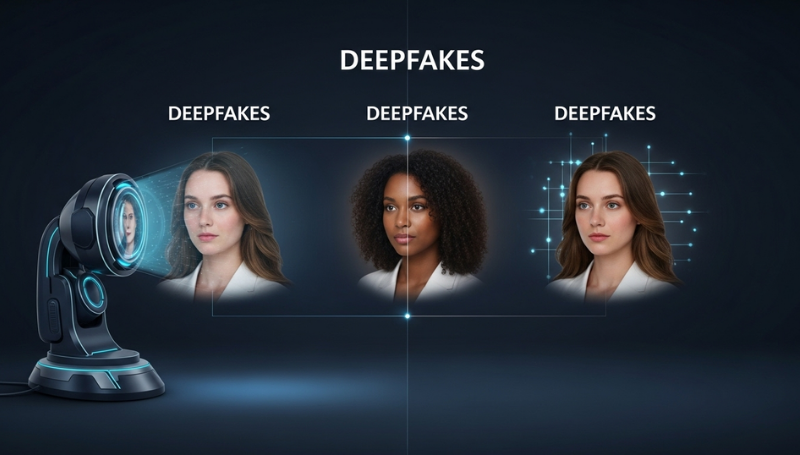

1. Red Flags and Detection Signs

1. Global Cooperation and Enforcement

2. Country-Specific Legislative Actions

3. Challenges and Future Outlook

Introduction to Deepfakes

Deepfake technology represents one of the most sophisticated and rapidly evolving cybersecurity threats of the 21st century. A deepfake is a piece of media created or manipulated by artificial intelligence (AI) to generate realistic but fabricated audio, video, or image content, making people appear to say or do things they never actually did. The term combines “deep learning” and “fake”.

Technology Behind Deepfakes

Deepfakes rely on sophisticated neural network architectures:

- Generative Adversarial Networks (GANs): Introduced by Ian Goodfellow and colleagues in 2014, this architecture is foundational to deepfake technology. A GAN trains two neural networks against each other:

  - Generator Network: Creates synthetic content by learning patterns from training data.

  - Discriminator Network: Evaluates content authenticity, distinguishing real from fake media.

  This adversarial process improves both networks over time. The output becomes highly convincing once the discriminator can no longer reliably tell real media from synthetic media.

- Autoencoder Architecture: An encoder compresses input images into a lower-dimensional latent space, and a decoder reconstructs images from those latent representations. Face-swapping tools typically train one shared encoder with a separate decoder per identity, so a face encoded from one person can be decoded with the other person's characteristics.

- Other Components: Deepfakes also utilize Convolutional Neural Networks (CNNs) for processing image data and manipulating features like facial landmarks, and Recurrent Neural Networks (RNNs) and Transformers for handling temporal sequences in audio synthesis.
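For reference, the adversarial training described above is usually written as the minimax objective from the original GAN formulation. The symbols are not defined elsewhere in this article and are introduced here only for illustration: $G$ is the generator, $D$ the discriminator, $p_{\text{data}}$ the distribution of real media, and $p_z$ the noise distribution the generator samples from.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator raises $V$ by scoring real samples near 1 and generated samples near 0, while the generator lowers it by pushing $D(G(z))$ toward 1. Practical deepfake tools often use variants of this loss, so this should be read as the textbook form rather than what any particular tool implements.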

Historical Evolution and Milestones

| Year(s) | Milestone | Description |
| --- | --- | --- |
| 2014 | Academic Origins | Ian Goodfellow introduces GANs, laying the groundwork for generative models. |
| 2017 | The Genesis | A Reddit user creates the r/deepfakes subreddit, demonstrating autoencoder-based face-swapping and democratizing synthetic media creation. |
| 2018 | Mainstream Awareness | BuzzFeed releases a viral video featuring a deepfake of President Barack Obama, educating the public about the technology’s capabilities and risks. |
| 2019-2021 | Technological Advancement | DeepFaceLab gains prominence, reportedly used to create over 95% of deepfake videos, improving quality and accessibility. |
| 2022-2025 | Weaponization and Commercialization | Deepfake technology transitions from novelty to criminal exploitation. Platforms like ElevenLabs and Synthesia commercialize creation, while criminals weaponize it for fraud. |

The COVID-19 pandemic accelerated digital transformation, inadvertently facilitating deepfake fraud by normalizing video conferencing and reducing in-person verification opportunities.

Types and Applications of Deepfakes

Deepfakes exist in several forms, serving both legitimate and malicious purposes:

| Type of Deepfake | Description | Malicious Use Example | Legitimate Application Example |
| --- | --- | --- | --- |
| Video Deepfakes | Most sophisticated form, including face swapping, face reenactment, and lip syncing. | Impersonating executives in video calls for fund transfers. | Movie visual effects and digital de-aging of actors. |
| Audio Deepfakes | Voice cloning and speech synthesis, often requiring as little as 3 seconds of source audio. | Emergency/family distress scams (“grandparent scams”). | Communication aids for speech impairments or multilingual corporate messaging. |
| Image Deepfakes | Static face swaps or age regression/progression. | Creating fake dating profiles (romance scams). | Recreations of historical figures for educational content. |

Beyond scams, deepfakes are also exploited for political misinformation (e.g., fabricated speeches), non-consensual pornography (which accounted for 96% of deepfake videos in 2019), and corporate espionage.

Global Statistics and Economic Impact

Deepfake scams have grown steeply: global fraud losses are projected to reach $3.2 billion in 2025, alongside a reported 320-fold increase since 2017.

- Prevalence: The number of detected deepfake videos grew from 7,964 in 2017 to 95,820 in 2023 (a 550% increase since 2019). The projection for 2025 is 175,000 videos.

- Economic Losses: Financial losses from deepfake-enabled fraud exceeded $200 million during the first quarter of 2025. Global deepfake-related fraud losses reached $410 million in the first half of 2025, surpassing all previous years combined. Losses fueled by generative AI are projected to reach $40 billion by 2027.

- Business Impact: In 2024, businesses faced an average loss of nearly $500,000 due to deepfake-related fraud, with large enterprises losing up to $680,000. Financial institutions have seen a 2,137% increase in deepfake fraud attempts in the last three years.

- Regional Growth Patterns (2022-2023):

  - North America: 1,740% increase

  - Asia-Pacific: 1,530% increase

  - Europe: 780% increase
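Percentage figures of this size are easy to misread, so the following minimal sketch shows the arithmetic behind them. The function names are illustrative, not taken from any cited report; the inputs are the figures quoted in this section.

```python
def percent_increase(old: float, new: float) -> float:
    """Percentage increase from old to new (100 -> 150 gives 50.0)."""
    if old <= 0:
        raise ValueError("old must be positive")
    return (new - old) / old * 100.0


def growth_multiple(pct_increase: float) -> float:
    """Convert a percentage increase into a multiple of the starting value."""
    return 1.0 + pct_increase / 100.0


def implied_baseline(new: float, pct_increase: float) -> float:
    """Starting value implied by an end value and a stated percentage increase."""
    return new / growth_multiple(pct_increase)


if __name__ == "__main__":
    # Detected videos, 2017 -> 2023, as quoted above.
    print(round(percent_increase(7_964, 95_820), 1))
    # 2019 video count implied by "550% increase since 2019".
    print(round(implied_baseline(95_820, 550)))
    # North America, 2022-2023: a 1,740% increase expressed as a multiple.
    print(round(growth_multiple(1_740), 1))
```

Note that an increase of N% corresponds to a multiple of 1 + N/100, so a 1,740% increase means roughly 18.4 times the starting level, not 17.4 times.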

Deepfake Scams Overview

Deepfake scams are a sophisticated evolution of traditional fraud, leveraging AI-generated synthetic media to exploit human trust and bypass conventional security measures. They fundamentally differ from traditional scams by creating convincing audio-visual evidence that victims perceive as authentic.

Core Tactics and Types of Scams

Scammers exploit psychological vulnerabilities through audiovisual authenticity and real-time interaction.

| Scam Type | Description | Financial Impact/Details |
| --- | --- | --- |
| CEO Fraud and BEC | Impersonating corporate executives (often CFOs or CEOs) to authorize unauthorized fund transfers. | Most financially devastating. Average losses per incident reach $630,000 in financial services. |
| Investment and Crypto Scams | Using deepfakes of high-profile figures (like Elon Musk) to promote fraudulent investment schemes and celebrity endorsements. | Musk’s likeness appears in approximately 90% of cryptocurrency-related deepfake fraud. Victims, often elderly, lose an average of $690,000 per incident. |
| Romance Scams | Creating convincing fake personas using AI-generated profile images and synthetic video calls to build trust for financial extraction. | Hong Kong authorities reported romance scam losses exceeding $46 million attributed to deepfake technology. |
| Emergency/Family Impersonation | Using voice cloning (often called “grandparent scams”) to impersonate family members in distress to trigger immediate financial assistance. | Exploits emotional manipulation and urgency against elderly targets. |
| Extortion | Threatening victims with fabricated compromising media. | Thai police officer impersonation case (2022) used video deepfakes for extortion. |

Deepfake Scam Lifecycle

Deepfake scams generally follow a systematic operational pattern:

- Target Identification: Criminals select high-value targets with accessible media samples.

- Data Collection: Gathering photos, videos, and audio recordings from social media and public sources, then preprocessing them and extracting features for model training.

- Synthetic Media Creation: Generating deepfakes using specialized AI tools (e.g., DeepFaceLab, ElevenLabs).

- Initial Contact and Trust Building: Establishing communication, often using synthetic media to establish credibility and authority over extended periods.

- Execution (Deepfake Deployment): Requesting fund transfers, sensitive information, or credential access, often utilizing voice clones or real-time video deepfakes.

- Evasion: Utilizing anonymization tools (VPNs, cryptocurrency) to avoid detection and prosecution.

Psychological Elements

Deepfake scams exploit fundamental psychological vulnerabilities, creating stress or urgency to impair critical thinking:

- Authority Bias: Impersonating executives or government officials to compel immediate compliance.

- Urgency Creation: Manufacturing time pressure (e.g., fabricated emergencies) to bypass normal verification procedures and prevent deliberation.

- Emotional Manipulation: Exploiting trust relationships, fear, or greed, particularly effective against elderly targets or in romance scams.

- Confidentiality Demands: Requesting secrecy to prevent victims from consulting with colleagues or family.

History of Deepfake Scams

Timeline from Origins to Recent Trends

The first documented deepfake scams involved crude audio manipulations in the 2019-2020 period.

- Early Audio Fraud (2019): The CEO of a UK-based energy company was reportedly deceived by an AI-generated voice imitating the chief executive of the firm’s German parent company, and transferred about €220,000 (roughly $243,000) to a Hungarian supplier.

- Corporate Escalation (2023): Corporate targeting increased significantly, signaled by multiple attempted deepfake attacks against high-profile companies. The proliferation of Elon Musk deepfakes reached critical mass, with Sensity research identifying him as the most commonly impersonated figure for crypto scams.

- The Crisis Year (2024): This year marked a turning point in severity and frequency:

  - January 2024: The Hong Kong multinational case resulted in a $25.6 million loss after deepfake video conference participants convinced an employee to authorize 15 fund transfers.

  - February 2024: The ARUP engineering firm fell victim to a similar multi-person video scheme, losing $25 million through deepfake CFO impersonation.

  - Political Interference: The Joe Biden robocall incident occurred during the 2024 New Hampshire primary, aiming to suppress voter turnout.

- Emerging Patterns (2025): Early 2025 saw financial losses from deepfake fraud exceed $200 million in the first quarter. Coordinated attacks, such as those targeting Italian business leaders using a voice clone of the Italian Defense Minister, demonstrate accelerating sophistication.

Enabling Factors for Growth

The rise of deepfake scams is attributed to several interconnected factors:

- Technological Democratization: The widespread availability of open-source tools like DeepFaceLab (reportedly powering 95% of deepfake creation) and commercial platforms (e.g., ElevenLabs, Resemble AI) has lowered the barrier to entry. Searches for “free voice cloning software” rose 120% between July 2023 and 2024.

- Social Media Proliferation: The abundance of publicly available photos, videos, and audio recordings provides criminals with extensive, high-quality training data necessary for creating convincing synthetic media.

- COVID-19 Acceleration: The pandemic normalized reliance on video conferencing for business and remote work, reducing opportunities for in-person verification and increasing reliance on digital identity.

- Regulatory Lag: The pace of technological advancement has outstripped regulatory responses, creating enforcement challenges due to the absence of specific deepfake legislation in many jurisdictions.

Notable Examples

The following table details 20 documented deepfake scam cases, demonstrating the global scope and variety of the threat:

| Incident Name | Date & Location | Deepfake Method | Scam Type | Financial Loss (USD/Local) | Perpetrator Outcomes | Region |
| --- | --- | --- | --- | --- | --- | --- |
| Hong Kong Bank Video Call | Jan 2024, Hong Kong | Multi-person video/audio impersonating executives | CEO Fraud | $25,600,000 | Investigation ongoing | Asia |
| ARUP Engineering CFO Scam | Feb 2024, Hong Kong/UK | Multi-person video/audio impersonating CFO | CEO Fraud | $25,000,000 | Investigation ongoing | Asia |
| Elon Musk Crypto Investment | 2023-Ongoing, Global/US | Video deepfakes endorsing fake platforms | Investment Scam | $12,000,000+ | Multiple arrests | Global |
| Singapore PM Crypto Scam | Dec 2023, Singapore | Video deepfakes of PM Lee Hsien Loong | Investment Scam | $46,000,000+ | Investigation ongoing | Asia |
| Brad Pitt Romance Scam | 2024, France | Celebrity impersonation with images/messages | Romance Scam | €830,000 (~$900,000) | Arrested in France | Europe |
| Italian Defense Minister Clone | Early 2025, Italy | Audio clone of Minister Crosetto | Patriotic Fraud/Extortion | €1,000,000+ | Investigation ongoing | Europe |
| German Business Executive Fraud | 2024, Germany | Audio/Video impersonation of CEO | CEO Fraud | €2,300,000+ | Investigation ongoing | Europe |
| UK Multinational CEO | 2024, UK | Audio clone targeting finance team | CEO Fraud | $35,000,000 | Unknown | Europe |
| UK Energy Company CEO | 2019, UK | Audio clone of company CEO | CEO Fraud | €220,000 (~$243,000) | Unknown | Europe |
| Joe Biden Primary Robocall | Jan 2024, US | Audio deepfake discouraging voting | Election Manipulation | N/A (Political impact) | 1 arrest (Consultant charged) | North America |
| Canadian Real Estate Scam | 2024, Canada | Fake video testimonials from investors | Investment Scam | $15,000,000 CAD | Investigation ongoing | North America |
| WPP CEO Impersonation | May 2024, UK | Video/Audio targeting executives | Information Theft/CEO Fraud | $0 (prevented) | Investigation ongoing | Europe |
| Ferrari CEO Voice Clone | Jul 2024, Italy | Audio clone | CEO Fraud | $0 (prevented) | Unknown | Europe |
| China Zoom Call Scam | 2024, China (Shanxi) | Video call with boss deepfake | Identity Fraud/CEO Fraud | $622,000 (or $262K in Shanxi) | Unknown | Asia |
| Japanese Romance Network | 2023-2024, Japan | Video/Audio profile deepfakes | Romance/Investment | $8,000,000+ (¥890 million) | Multiple arrests | Asia |
| UAE Banking Fraud | 2024, UAE | Video/Audio impersonating customers | Banking Fraud | $5,000,000+ | Investigation ongoing | Middle East |
| Australian Family Emergency | 2024, Australia | Voice cloning of family member | Emergency Scam | $450,000 combined | 12 arrests | Oceania |
| Mexican Government Official | 2024, Mexico | Video impersonation | Authority Impersonation | Unknown | Investigation ongoing | North America |
| South African Investment Seminar | 2024, South Africa | Deepfake presenter conducting fake seminars | Investment Scam | 45,000,000 ZAR | Investigation by Hawks unit | Africa |
| Colombian Drug Money Laundering | 2024, Colombia | Government official deepfake authorizations | Identity/Authority Fraud | $31,000,000 USD | DEA joint operation, 12 arrests | South America |

Statistics and Data

The rapid expansion of deepfake threats is quantified by alarming growth figures and significant financial losses across key regions:

Prevalence and Growth Rates

| Metric | Value/Change | Timeframe/Context |
| --- | --- | --- |
| Deepfake Videos Detected (2017) | 7,964 | Total |
| Deepfake Videos Detected (2023) | 95,820 | 550% increase since 2019 |
| Deepfake Videos Projected (2025) | 175,000 | – |
| Financial Institution Fraud Attempts Growth | 2,137% | In the last three years |
| Deepfake Incidents Reported (Q1 2025) | 179 | 19% more than the total recorded in all of 2024 |

Regional Growth Patterns (2022-2023)

| Region | Percentage Increase in Deepfake Cases |
| --- | --- |
| North America | 1,740% increase |
| Asia-Pacific | 1,530% increase |
| Europe | 780% increase |
| Middle East & Africa | 450% increase |
| Latin America | 410% increase |

Economic Impact and Losses

| Loss Metric | Value | Timeframe/Context |
| --- | --- | --- |
| Global Fraud Losses | $3.2 billion | Projected 2025 |
| Cumulative Losses | $128 million | 2019-2023 |
| Losses Q1 2025 | Exceeded $200 million | – |
| Projected Losses Fueled by GenAI | $40 billion | By 2027 |
| Average Loss per CEO Fraud Incident | $630,000 | In financial services |
| Average Loss per Investment Scam Incident | $690,000 | – |

Detection Effectiveness Metrics

| Method | Reported Effectiveness |
| --- | --- |
| AI Detection Tools (Sophisticated) | 70-96% accuracy (depending on quality) |
| Human Detection (General Population) | 57% accuracy |
| Human Detection (Trained Personnel) | 72% accuracy |
| Multi-Factor Authentication (MFA) | 95% reduction in successful impersonation attempts |

Detection and Safety

Detecting deepfakes is challenging because generation techniques advance faster than detection tools, so individual vigilance and independent verification remain essential.

Red Flags and Detection Signs

1. Visual Indicators (Video Deepfakes):

- Facial Inconsistencies: Unnatural blinking patterns (irregular or absent), unnatural smoothness of skin texture, or the absence of subtle micro-expressions.

- Technical Artifacts: Lighting and shadow mismatches (light does not match the environment), blurring or distortion around hairlines and facial boundaries, and resolution mismatches between the face and the background.

2. Audio Detection Methods:

- Vocal Pattern Analysis: Robotic or synthetic quality, unnatural rhythm, stress, or intonation (prosody inconsistencies), or unnatural breathing patterns.

- Lip-Sync Discrepancies: Misalignment between mouth movements and the audio content.

3. Contextual and Behavioral Red Flags:

- Urgency and Secrecy: Unusual pressure for immediate action or demands for confidentiality.

- Request Anomalies: Unexpected requests for fund transfers or credential access, or attempts to bypass normal verification procedures.

- Communication Channel Changes: A sudden switch to unfamiliar communication methods.
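The contextual red flags above lend themselves to a simple rule-based screen. The sketch below is one possible way to express them, under stated assumptions: the phrase lists, the `RequestContext` type, and the flag names are all hypothetical and would need tuning for a real environment. It flags wording and channel anomalies only and does not analyze audio or video.

```python
import re
from dataclasses import dataclass, field

# Hypothetical phrase lists for illustration; not an exhaustive or validated set.
RED_FLAG_PATTERNS = {
    "urgency": r"\b(urgent|immediately|right now|within the hour|asap)\b",
    "secrecy": r"\b(confidential|keep this between us|do not tell|don't tell|secret)\b",
    "payment_or_credentials": r"\b(wire|transfer|gift cards?|crypto|password|login|credentials)\b",
    "bypass_procedure": r"\b(skip|bypass|no need to verify|without approval)\b",
}


@dataclass
class RequestContext:
    message: str                                   # text or transcript of the request
    channel: str                                   # channel the request arrived on
    usual_channels: set[str] = field(default_factory=set)


def contextual_red_flags(ctx: RequestContext) -> list[str]:
    """Return the names of the red flags present in a request."""
    text = ctx.message.lower()
    flags = [name for name, pattern in RED_FLAG_PATTERNS.items()
             if re.search(pattern, text)]
    # A sudden switch to an unfamiliar channel is itself a warning sign.
    if ctx.usual_channels and ctx.channel not in ctx.usual_channels:
        flags.append("channel_change")
    return flags


if __name__ == "__main__":
    ctx = RequestContext(
        message="This is urgent. Wire the funds today and keep this between us.",
        channel="whatsapp",
        usual_channels={"email", "teams"},
    )
    print(contextual_red_flags(ctx))
```

A non-empty result is a prompt to verify through a separate channel, not proof of fraud; legitimate requests can also be urgent.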

Prevention Strategies

1. Technical Measures:

- Multi-Factor Authentication (MFA): Organizations and individuals should implement robust MFA combining multiple verification methods (biometrics, tokens, device recognition) to create obstacles for fraudsters.

- Deepfake Detection Tools: Several tools offer detection capabilities, including Intel FakeCatcher (96% accuracy), Microsoft Video Authenticator (84% accuracy), and Deepware Scanner (web-based).

- Advanced Verification: Utilize reverse image search to identify source materials, and metadata analysis to examine file creation data.

2. Behavioral and Organizational Protocols:

- Independent Channel Verification: Always contact the individual or entity through a separate, verified communication channel (e.g., calling a known phone number) to confirm the request.

- Knowledge-Based Questions: Ask specific questions only the real person would know (e.g., about shared memories or personal details).

- Safe Word/Code Word: Families should agree on a pre-determined “safe word” or secret code for emergency situations to ensure legitimacy during distress calls.

- Multi-Person Authorization: Implement dual or multiple signatory requirements for significant financial transactions within organizations.

- Digital Privacy Management: Limit the public sharing of photos, videos, and voice recordings on social media to minimize the training data available to criminals.
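The organizational protocols above (independent channel verification, multi-person authorization, and a safe word) can be encoded as explicit checks rather than left to judgment under pressure. The following is a minimal sketch under stated assumptions: the `TransferRequest` type, the $10,000 threshold, and the two-approver minimum are hypothetical values chosen for illustration, not recommendations from any standard.

```python
import hmac
from dataclasses import dataclass, field


@dataclass
class TransferRequest:
    amount: float
    requester: str
    approvers: set[str] = field(default_factory=set)
    # True only after calling back on a known-good number, never the inbound one.
    callback_verified: bool = False


def may_execute(req: TransferRequest,
                threshold: float = 10_000.0,
                min_approvers: int = 2) -> bool:
    """Allow a transfer only if the procedural safeguards are satisfied."""
    if not req.callback_verified:
        return False                      # independent channel check is mandatory
    if req.amount < threshold:
        return True                       # small transfers need no extra sign-off
    # The requester cannot count as one of their own approvers.
    independent = req.approvers - {req.requester}
    return len(independent) >= min_approvers


def safe_word_matches(spoken: str, agreed: str) -> bool:
    """Compare a spoken safe word with the agreed one, ignoring case and spacing."""
    a = spoken.strip().lower().encode("utf-8")
    b = agreed.strip().lower().encode("utf-8")
    return hmac.compare_digest(a, b)      # constant-time comparison


if __name__ == "__main__":
    req = TransferRequest(250_000, "cfo", {"cfo", "controller"}, callback_verified=True)
    print(may_execute(req))               # requester's own approval is not counted
    req.approvers.add("treasurer")
    print(may_execute(req))
```

The design choice worth noting is that a convincing voice or video never appears as an input: approval depends only on checks that a deepfake cannot satisfy by sounding authentic.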

Post-Incident Response

If a deepfake scam is suspected or confirmed, immediate action is crucial:

1. Immediate Assessment: Stop all interaction and financial actions immediately. Ask spontaneous questions or request specific physical gestures during video calls for real-time testing.

2. Security Measures: Change passwords on all potentially compromised accounts and secure systems. Contact banks or financial institutions immediately to freeze accounts or reverse transactions.

3. Evidence Collection: Document and preserve all communication logs, screen recordings, and interaction records for forensic analysis.

4. Reporting: Report incidents to appropriate authorities, such as local police cybercrime units, the FBI Internet Crime Complaint Center (IC3), and financial institution fraud departments.
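For the evidence collection step, one common practice is to record a cryptographic hash of each preserved item so that later alterations can be detected. This is an assumption about how preservation might be done, not a procedure prescribed by the authorities named above; the function names and manifest layout are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def evidence_record(name: str, data: bytes, note: str = "") -> dict:
    """Describe one preserved item with its SHA-256 digest and a UTC timestamp."""
    return {
        "name": name,
        "sha256": hashlib.sha256(data).hexdigest(),
        "size_bytes": len(data),
        "recorded_at_utc": datetime.now(timezone.utc).isoformat(),
        "note": note,
    }


def build_manifest(items: dict[str, bytes]) -> str:
    """Build a JSON manifest covering all items, sorted by name."""
    records = [evidence_record(name, data) for name, data in sorted(items.items())]
    return json.dumps(records, indent=2)


if __name__ == "__main__":
    # In practice the bytes would be read from saved logs or recordings.
    manifest = build_manifest({
        "chat_log.txt": b"example chat export",
        "call_recording.bin": b"example recording bytes",
    })
    print(manifest)
```

Store the manifest separately from the evidence itself, and keep the original files unmodified; work on copies when reviewing them.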

Government Measures

Governments worldwide are accelerating legislative and cooperative efforts to combat the rapidly evolving deepfake threat, although regulatory speed remains a significant challenge.

Global Cooperation and Enforcement

International cooperation has intensified, with synthetic media fraud identified as a critical component of cybercrime by the UN Office on Drugs and Crime (UNODC). INTERPOL’s Operation Haechi IV addressed deepfake technology threats, resulting in the arrest of approximately 3,500 people and the seizure of $300 million in assets across 34 countries. Europol’s Innovation Lab has developed specialized capabilities for deepfake threat analysis and training for law enforcement.

Country-Specific Legislative Actions

| Jurisdiction | Key Legislation/Regulation | Core Provisions |
| --- | --- | --- |
| European Union | AI Act (Implemented 2024/2025) | World’s first major regulatory framework. Mandates clear labeling and watermarking for AI-generated content. Classifies high-risk deepfakes (e.g., political manipulation) with strict regulatory requirements and penalties up to €35 million. |
| United States | DEEPFAKES Accountability Act (proposed, 2023); TAKE IT DOWN Act (2025) | The DEEPFAKES Accountability Act is proposed legislation that would require disclosure by deepfake content creators and establish criminal penalties. The TAKE IT DOWN Act criminalizes knowingly publishing non-consensual intimate imagery, including AI-generated deepfakes. At the state level, the Utah AI Policy Act requires disclosure of AI use in consumer transactions. |
| China | Deep Synthesis Regulation | Pioneering policy establishing mandatory explicit labeling and source traceability requirements for all doctored content using deep synthesis technology. Requires service providers to maintain a “correct political direction”. |
| India | IT Rules, 2021 (Enhanced); IT Act, 2000 | Lacks specific deepfake legislation. Requires social media intermediaries to exercise due diligence and remove deepfakes within a 36-hour timeline upon reporting. Utilizes the IT Act to address cheating by impersonation using computer resources. |
| Denmark | Copyright Law Amendment | Amended copyright law to establish that every person “has the right to their own body, facial features and voice,” creating personal rights protection against deepfake misuse. |

Challenges and Future Outlook

The primary challenge is the regulatory lag, where rapid technological evolution outpaces regulatory development and enforcement.

- Enforcement Difficulties: Jurisdictional challenges in cross-border cybercrime, the technical complexity of evidence collection, and the use of anonymization technology complicate perpetrator identification.

- Future Trends: Experts anticipate strengthening international cooperation through bilateral and multilateral treaties and the harmonization of legal definitions. Future regulatory approaches emphasize real-time detection technology mandates and platform liability for synthetic media distribution.

Conclusion

Deepfake scams represent a fundamental shift in the cybersecurity threat landscape, combining sophisticated AI technology with traditional social engineering to create unprecedented risks.

Key Takeaways:

- Scale and Impact: Global deepfake fraud losses are projected to reach $3.2 billion in 2025. The threat is growing exponentially, evidenced by a 1,740% increase in deepfake cases in North America (2022-2023) and a 2,137% rise in deepfake fraud attempts against financial institutions in the last three years.

- Vulnerability: The democratization of AI tools has made deepfake creation highly accessible, with criminals needing as little as three seconds of audio to create a voice clone. Contemporary deepfakes are highly effective, while human detection capabilities are low (57% accuracy in the general population).

- High-Value Targeting: CEO fraud and business email compromise remain the most financially devastating applications, with the Hong Kong multinational and ARUP cases demonstrating losses of $25 million or more from single incidents of video call impersonation.

- Necessity of Verification: The successful prevention of several high-profile attempts (e.g., Ferrari, WPP) demonstrates the effectiveness of non-technological defenses, specifically knowledge-based authentication and clear verification protocols.

Recommendations for Stakeholders:

1. Technical Investment: Organizations must deploy Multi-Factor Authentication systems and integrate AI-powered detection tools into communication systems.

2. Procedural Safeguards: Implement rigorous verification protocols, such as mandatory independent channel verification and multi-person authorization for financial transactions.

3. Continuous Education: Regular employee training programs must be implemented to identify deepfake indicators and respond to simulated deepfake scenarios.

4. Digital Privacy: Individuals should minimize the public sharing of personal voice, video, and image content to reduce the data available for deepfake creation.

The threat will likely intensify before effective global countermeasures mature. Success requires coordinated efforts emphasizing prevention, detection, and response capabilities that continuously evolve alongside AI technologies.

FAQs for Deepfake Scams

1. What exactly is a deepfake, and how did the term originate?

A deepfake is AI-manipulated media that creates realistic but fake audio, video, or images, making it seem like someone said or did something they didn’t. The term blends “deep learning” (an AI technique) with “fake,” and it first gained traction around 2017 on platforms like Reddit.

2. How do Generative Adversarial Networks (GANs) contribute to creating deepfakes?

GANs use two competing neural networks: a generator that produces fake content and a discriminator that checks for authenticity. Through this rivalry, the generator improves until the fakes are highly convincing, forming the backbone of many deepfake tools since their introduction in 2014.

3. What role did the COVID-19 pandemic play in the rise of deepfake scams?

The pandemic sped up digital shifts, like widespread video calls, which reduced face-to-face checks and made it easier for scammers to use deepfakes in fraud, such as impersonating executives or family members without raising immediate suspicion.

4. What are the differences between video, audio, and image deepfakes in terms of malicious uses?

Video deepfakes often involve face swaps for executive impersonation in calls leading to fund transfers. Audio deepfakes enable voice cloning for scams like fake family emergencies. Image deepfakes are used in static fakes, such as phony profiles for romance scams exploiting trust for money.

5. Why are elderly individuals particularly vulnerable to deepfake investment scams?

These scams exploit emotional triggers like greed or fear, using deepfakes of figures like Elon Musk to endorse fake crypto schemes. Elderly victims, often less tech-savvy, face average losses of $690,000 per incident due to urgency and authority bias that impair judgment.

6. How has the number of detected deepfake videos changed from 2017 to the projected 2025 figures?

Detected deepfake videos rose from 7,964 in 2017 to 95,820 in 2023, a 550% jump since 2019. Projections for 2025 estimate 175,000 videos, reflecting exponential growth driven by accessible AI tools and their weaponization for fraud.

7. What psychological tactics do deepfake scammers use to manipulate victims?

Scammers leverage authority bias by mimicking bosses or officials, create urgency with fake emergencies, manipulate emotions like fear or greed, and demand secrecy to prevent victims from verifying with others, all to bypass critical thinking.

8. Can humans reliably detect deepfakes, and what tools can help improve accuracy?

Members of the general population correctly identify deepfakes only 57% of the time, while trained personnel reach 72%. AI tools like Intel FakeCatcher (96% accuracy) or Microsoft Video Authenticator (84%) improve detection by analyzing artifacts, but combining them with behavioral checks is most effective.

9. What key legislation has the European Union implemented to combat deepfakes?

The EU’s AI Act, rolled out in 2024/2025, requires labeling and watermarking of AI content, classifies high-risk deepfakes (like political fakes) strictly, and imposes penalties up to €35 million to curb misuse in fraud and misinformation.

10. What steps should someone take immediately after suspecting they’ve fallen for a deepfake scam?

Halt all interactions and transactions, verify via a separate channel, change passwords and freeze accounts, gather evidence like logs and recordings, and report to authorities such as local cybercrime units or the FBI’s IC3 for potential recovery and investigation.